
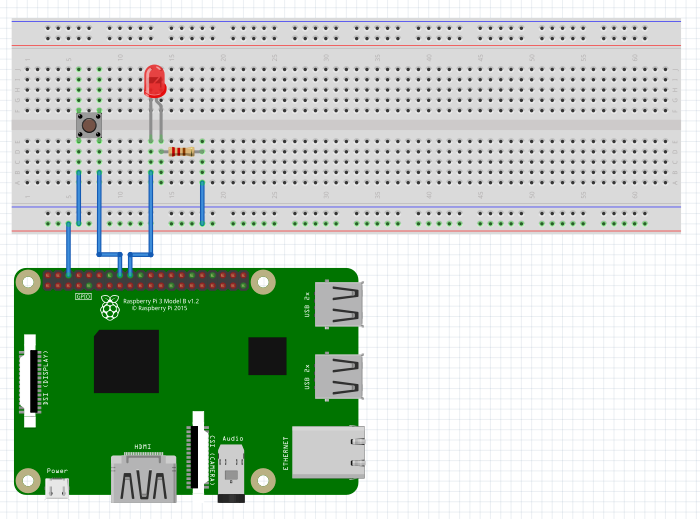

1. Build the basic skeleton: pressing the button turns the LED on

https://developmentdiary.tistory.com/482

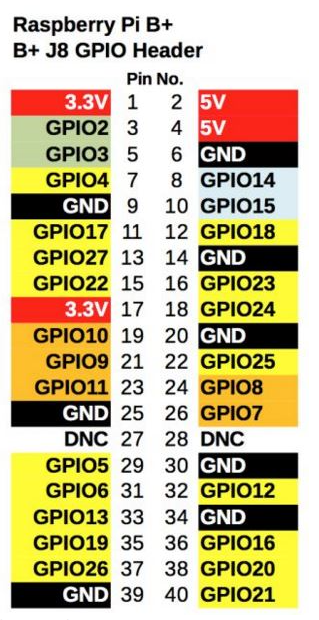

Raspberry Pi LED button (developmentdiary.tistory.com)

<1. Code that turns the LED on when the button (GPIO23) is pressed>

# -*- coding:utf-8 -*-  # allows Korean text in the source
# Keep the LED state between button presses
import RPi.GPIO as GPIO  # GPIO library
import time  # for sleep

GPIO.setmode(GPIO.BCM)  # use BCM pin numbering
GPIO.setup(23, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # button input on GPIO23
GPIO.setup(24, GPIO.OUT)  # LED output on GPIO24

try:
    a = False
    while True:
        button_state = GPIO.input(23)  # read the button state
        if button_state == False:  # pressed (pull-up, so pressed reads low)
            if a:
                a = False
            else:
                a = True
        if a:
            GPIO.output(24, True)  # LED on
        else:
            GPIO.output(24, False)  # LED off
        time.sleep(0.15)
except KeyboardInterrupt:  # on Ctrl-C
    GPIO.cleanup()

Go to the folder that contains the script and run it:

python LED_Button2.py
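The loop above flips `a` on every 0.15 s poll while the button is held, so a long press can toggle the LED more than once. A minimal sketch of flipping only on the moment of the press, assuming the same pull-up wiring (pressed reads low). The function name `toggle_on_press` and the simulated readings are my own, so it runs without a Raspberry Pi:

```python
def toggle_on_press(samples, initial=False):
    """Return the LED state after each sample, flipping once per press.

    `samples` holds raw pin readings: True = released, False = pressed
    (pull-up wiring, as in the script above).
    """
    led = initial
    previous = True  # assume the button starts released
    states = []
    for current in samples:
        if previous and not current:  # high -> low: a new press started
            led = not led
        previous = current
        states.append(led)
    return states


# One long press (three low samples) followed by one short press.
readings = [True, False, False, False, True, False, True]
print(toggle_on_press(readings))
```

On the Pi, the same comparison of the previous and current reading would go inside the `while True:` loop, with `GPIO.input(23)` supplying each sample.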

<2. Adding the Google Speech API>

https://developers.google.com/assistant/sdk/guides/service/python/extend/handle-device-commands

Handle Commands | Google Assistant SDK | Google Developers (developers.google.com)

1. Download the Google Assistant SDK source.

git clone https://github.com/googlesamples/assistant-sdk-python

2. Install

# Leave the virtual environment, then start it again

> deactivate

> sudo reboot

# Activate the virtual environment env

> source ~/env/bin/activate

# Install RPi.GPIO

> pip install RPi.GPIO

(Error) buf = arr.tostring()

Open the file named in the error message and change buf = arr.tostring() to buf = arr.tobytes(). The error comes from the Python version.
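A small sketch of that one-line change, assuming `arr` is a standard-library `array.array` (the failing library may use a NumPy array instead, which also has `tobytes()`). `array.array.tostring()` was removed in Python 3.9. The sample values are made up:

```python
from array import array

# Hypothetical 16-bit audio samples standing in for the library's `arr`.
arr = array("h", [0, 1, -1, 32767])

# arr.tostring() raises AttributeError on Python 3.9+; tobytes() returns
# the same raw bytes.
buf = arr.tobytes()

print(type(buf).__name__, len(buf))
```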

> Fixed

pip3 install pathlib2

pip install click

sudo pip install grpcio

https://pypi.org/project/grpcio/

grpcio: HTTP/2-based RPC framework (pypi.org)

import RPi.GPIO as GPIO

device_handler = device_helpers.DeviceRequestHandler('YOUR_DEVICE_ID')
GPIO.setmode(GPIO.BCM)
GPIO.setup(24, GPIO.OUT, initial=GPIO.LOW)

@device_handler.command('action.devices.commands.OnOff')
def onoff(on):
    if on:
        logging.info('Turning device on')
        GPIO.output(24, 1)
    else:
        logging.info('Turning device off')
        GPIO.output(24, 0)

The guide says to paste this in. (Google: IoT button control)
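To see what the `@device_handler.command(...)` decorator is doing, here is a rough sketch of the registration pattern. `CommandRegistry` is a hypothetical stand-in I wrote for illustration, not the SDK's `DeviceRequestHandler`, and a dictionary replaces the real `GPIO.output` call so the example runs anywhere:

```python
class CommandRegistry:
    """Hypothetical stand-in for the SDK's device request handler."""

    def __init__(self):
        self._handlers = {}

    def command(self, name):
        """Return a decorator that stores the function under `name`."""
        def decorator(func):
            self._handlers[name] = func
            return func
        return decorator

    def dispatch(self, name, **params):
        """Call the handler registered for `name` with the given params."""
        return self._handlers[name](**params)


registry = CommandRegistry()
led_state = {"on": False}  # stands in for GPIO.output(24, ...)


@registry.command("action.devices.commands.OnOff")
def onoff(on):
    led_state["on"] = bool(on)
    return led_state["on"]


# Simulate the assistant sending an OnOff command.
registry.dispatch("action.devices.commands.OnOff", on=True)
print(led_state)
```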

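However, I changed the part that waits for the Enter key.

> Pressing the button starts recognition; when the conversation ends, it goes back to waiting with the light off.

# Original code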

with SampleAssistant(lang, device_model_id, device_id,
                     conversation_stream, display,
                     grpc_channel, grpc_deadline,
                     device_handler) as assistant:
    # If file arguments are supplied:
    # exit after the first turn of the conversation.
    if input_audio_file or output_audio_file:
        assistant.assist()
        return

    # If no file arguments supplied:
    # keep recording voice requests using the microphone
    # and playing back assistant response using the speaker.
    # When the once flag is set, don't wait for a trigger. Otherwise, wait.
    wait_for_user_trigger = not once
    while True:
        if wait_for_user_trigger:
            click.pause(info='Press Enter to send a new request...')
        continue_conversation = assistant.assist()
        # wait for user trigger if there is no follow-up turn in
        # the conversation.
        wait_for_user_trigger = not continue_conversation

        # If we only want one conversation, break.
        if once and (not continue_conversation):
            print("end")
            break

I changed it as follows.

> First version: pressing the button starts speech recognition; pressing it twice sends the request

# Modified code

GPIO.setmode(GPIO.BCM)  # use BCM pin numbering
GPIO.setup(23, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # button input on GPIO23
GPIO.setup(24, GPIO.OUT)  # LED output on GPIO24

with SampleAssistant(lang, device_model_id, device_id,
                     conversation_stream, display,
                     grpc_channel, grpc_deadline,
                     device_handler) as assistant:
    # If file arguments are supplied:
    # exit after the first turn of the conversation.
    if input_audio_file or output_audio_file:
        assistant.assist()
        return

    # If no file arguments supplied:
    # keep recording voice requests using the microphone
    # and playing back assistant response using the speaker.
    # When the once flag is set, don't wait for a trigger. Otherwise, wait.
    wait_for_user_trigger = not once
    continue_conversation = False  # defined before the check at the end of the loop
    while True:
        a = not wait_for_user_trigger  # follow-up turns start without a button press
        if wait_for_user_trigger:
            # click.pause(info='Press Enter to send a new request...')
            button_state = GPIO.input(23)  # read the button state
            if button_state == False:  # pressed (pull-up, so pressed reads low)
                a = True
        if a:
            GPIO.output(24, True)  # LED on while the assistant listens
            continue_conversation = assistant.assist()
            print("assist00000")
            # wait for user trigger if there is no follow-up turn in
            # the conversation.
            wait_for_user_trigger = not continue_conversation
            print("not continue")
        else:
            GPIO.output(24, False)  # LED off while idle
            print("i'm nothing")
            time.sleep(0.15)

        # If we only want one conversation, break.
        if once and (not continue_conversation):
            print("end")
            break
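The idea of swapping `click.pause` for a button can also be pulled out into a small helper. This is only a sketch: `wait_for_press`, `read_pin`, and the simulated readings are names I made up, and the pin reader is passed in so the function can be tried without hardware:

```python
import time


def wait_for_press(read_pin, poll_interval=0.15, sleep=time.sleep):
    """Block until read_pin() is falsy (pressed, with pull-up wiring).

    Returns how many polls saw the button released, which is handy
    for checking the behaviour in a test.
    """
    polls = 0
    while read_pin():
        polls += 1
        sleep(poll_interval)
    return polls


# Simulated pin: released for three polls, then pressed.
fake_readings = iter([True, True, True, False])
polls = wait_for_press(lambda: next(fake_readings), sleep=lambda _: None)
print(polls)
```

On the Pi, the call would look like `wait_for_press(lambda: GPIO.input(23))`, placed where `click.pause(...)` used to be.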

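> Second version: starts right away / when speech recognition ends / prompt / "done" button or "recognize again" button

sound_pin = 18

led_pin = 24

<Buttons>

button_pin = 16 : right

button_pin = 12 : left

(Command to activate the virtual environment)

source ~/env/bin/activate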

#!/usr/bin/env python

# Copyright 2017 Google Inc. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

"""Google Cloud Speech API sample application using the streaming API.

NOTE: This module requires the additional dependency `pyaudio`. To install

using pip:

pip install pyaudio

Example usage:

python transcribe_streaming_mic.py

"""

# [START speech_transcribe_streaming_mic]

from __future__ import division

import os

import re

import sys

from google.cloud import speech

import pyaudio

from six.moves import queue

import RPi.GPIO as GPIO

import time

from playsound import playsound

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = \
    "*************your credentials key .json"  # path to your own service-account key file

# Audio recording parameters

RATE = 16000

CHUNK = int(RATE / 10) # 100ms

class MicrophoneStream(object):
    """Opens a recording stream as a generator yielding the audio chunks."""

    def __init__(self, rate, chunk):
        self._rate = rate
        self._chunk = chunk

        # Create a thread-safe buffer of audio data
        self._buff = queue.Queue()
        self.closed = True

    def __enter__(self):
        self._audio_interface = pyaudio.PyAudio()
        self._audio_stream = self._audio_interface.open(
            format=pyaudio.paInt16,
            # The API currently only supports 1-channel (mono) audio
            # https://goo.gl/z757pE
            channels=1,
            rate=self._rate,
            input=True,
            frames_per_buffer=self._chunk,
            # Run the audio stream asynchronously to fill the buffer object.
            # This is necessary so that the input device's buffer doesn't
            # overflow while the calling thread makes network requests, etc.
            stream_callback=self._fill_buffer,
        )

        self.closed = False

        return self

    def __exit__(self, type, value, traceback):
        self._audio_stream.stop_stream()
        self._audio_stream.close()
        self.closed = True
        # Signal the generator to terminate so that the client's
        # streaming_recognize method will not block the process termination.
        self._buff.put(None)
        self._audio_interface.terminate()

    def _fill_buffer(self, in_data, frame_count, time_info, status_flags):
        """Continuously collect data from the audio stream, into the buffer."""
        self._buff.put(in_data)
        return None, pyaudio.paContinue

    def generator(self):
        while not self.closed:
            # Use a blocking get() to ensure there's at least one chunk of
            # data, and stop iteration if the chunk is None, indicating the
            # end of the audio stream.
            chunk = self._buff.get()
            if chunk is None:
                return
            data = [chunk]

            # Now consume whatever other data's still buffered.
            while True:
                try:
                    chunk = self._buff.get(block=False)
                    if chunk is None:
                        return
                    data.append(chunk)
                except queue.Empty:
                    break

            yield b"".join(data)

############################################################################
############################################################################
# The important part starts here

_data = ""  # last final transcript, read by the main loop when button 1 is pressed


def listen_print_loop(responses):
    """Iterates through server responses and prints them.

    The responses passed is a generator that will block until a response
    is provided by the server.

    Each response may contain multiple results, and each result may contain
    multiple alternatives; for details, see https://goo.gl/tjCPAU. Here we
    print only the transcription for the top alternative of the top result.

    In this case, responses are provided for interim results as well. If the
    response is an interim one, print a line feed at the end of it, to allow
    the next result to overwrite it, until the response is a final one. For the
    final one, print a newline to preserve the finalized transcription.
    """
    global _data
    num_chars_printed = 0
    for response in responses:
        if not response.results:
            continue

        # The `results` list is consecutive. For streaming, we only care about
        # the first result being considered, since once it's `is_final`, it
        # moves on to considering the next utterance.
        result = response.results[0]
        if not result.alternatives:
            continue

        # Display the transcription of the top alternative.
        transcript = result.alternatives[0].transcript

        # Display interim results, but with a carriage return at the end of the
        # line, so subsequent lines will overwrite them.
        #
        # If the previous result was longer than this one, we need to print
        # some extra spaces to overwrite the previous result
        overwrite_chars = " " * (num_chars_printed - len(transcript))

        if not result.is_final:
            sys.stdout.write(transcript + overwrite_chars + "\r")
            sys.stdout.flush()

            num_chars_printed = len(transcript)

        else:
            # Example of a final response:
            # results {
            #   alternatives {
            #     transcript: "Let\'s go"
            #     confidence: 0.92365956
            #   }
            #   is_final: true
            #   result_end_time {
            #     seconds: 1
            #     nanos: 950000000
            #   }
            #   language_code: "ko-kr"
            # }
            # total_billed_time {
            #   seconds: 15
            # }
            print(transcript + overwrite_chars)

            # Exit recognition if any of the transcribed phrases could be
            # one of our keywords.
            if re.search(r"\b(exit|quit)\b", transcript, re.I):
                print("Exiting..")
                break

            # Keep the first final transcript and stop listening.
            _data = transcript + overwrite_chars
            print("_data" + _data)
            break

def main():
    # sound
    playsound("seach_voice.mp3")
    light()

    # See http://g.co/cloud/speech/docs/languages
    # for a list of supported languages.
    language_code = "ko-KR"  # a BCP-47 language tag

    client = speech.SpeechClient()
    config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
        sample_rate_hertz=RATE,
        language_code=language_code,
    )

    streaming_config = speech.StreamingRecognitionConfig(
        config=config, interim_results=True
    )

    with MicrophoneStream(RATE, CHUNK) as stream:
        audio_generator = stream.generator()
        requests = (
            speech.StreamingRecognizeRequest(audio_content=content)
            for content in audio_generator
        )

        responses = client.streaming_recognize(streaming_config, requests)

        # Now, put the transcription responses to use.
        listen_print_loop(responses)

    # print("got the sentence, res")
    melody()
    light()

    # button
    print("button click")
    playsound("button-voice.mp3")

# event1
button1_state = False
button1_state_change = False


def button1Pressed(channel):
    print("1")
    # #23 Right 1
    # if complete:
    #     exit()
    # else:
    #     main()


button2_state = False
button2_state_change = False


def button2Pressed(channel):
    print("2")
    # 17 Left 2
    main()

def melody():
    sound_pin = 18
    GPIO.setup(sound_pin, GPIO.OUT)

    # PWM(pin number, frequency in Hz)
    # duty cycle (loudness) 0-100 %
    pwm = GPIO.PWM(sound_pin, 1000.0)
    pwm.start(0)

    # sound
    notes = [262, 294, 330, 349, 392, 440, 494, 523]  # frequencies in Hz

    for note in range(0, 6):
        pwm.ChangeFrequency(notes[note])
        pwm.ChangeDutyCycle(50)
        time.sleep(0.1)

    pwm.ChangeDutyCycle(0)  # silence

on = False


def light():
    print("light")
    # turn on / off
    global on
    led_pin = 24
    GPIO.setmode(GPIO.BCM)
    # light on
    GPIO.setup(led_pin, GPIO.OUT)
    on = not on  # flip the state on every call
    if on:
        GPIO.output(led_pin, True)
    else:
        GPIO.output(led_pin, False)

if __name__ == "__main__":
    try:
        # init()
        button1_pin = 16
        button2_pin = 12

        GPIO.setwarnings(True)
        GPIO.setmode(GPIO.BCM)
        GPIO.setup(button1_pin, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # Button1 input, GPIO16
        GPIO.setup(button2_pin, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # Button2 input, GPIO12
        # GPIO.remove_event_detect(button1_pin)
        # GPIO.remove_event_detect(button2_pin)
        # GPIO.add_event_detect(button1_pin, GPIO.RISING, callback=button1Pressed)
        GPIO.add_event_detect(button2_pin, GPIO.RISING, callback=button2Pressed)

        main()

        while True:
            GPIO.wait_for_edge(button1_pin, GPIO.FALLING)
            print("Button pressed! save", _data)

    except KeyboardInterrupt:
        # exit with CTRL+C
        print("CTRL+C used to end Program")
    finally:
        GPIO.cleanup()
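The part of `listen_print_loop` that matters for the buttons is "take the first final transcript and stop". A minimal sketch of just that step, using fake response objects built with `SimpleNamespace` in place of the real Speech API responses. The helper names `first_final_transcript` and `fake_response` are my own, and no network or microphone is needed:

```python
from types import SimpleNamespace


def first_final_transcript(responses):
    """Return the top transcript of the first final result, or None."""
    for response in responses:
        if not response.results:
            continue
        result = response.results[0]
        if not result.alternatives:
            continue
        if result.is_final:
            return result.alternatives[0].transcript
    return None


def fake_response(text, is_final):
    """Build an object shaped like a streaming response (illustration only)."""
    alternative = SimpleNamespace(transcript=text)
    result = SimpleNamespace(alternatives=[alternative], is_final=is_final)
    return SimpleNamespace(results=[result])


stream = [
    SimpleNamespace(results=[]),          # empty response, skipped
    fake_response("turn on", False),      # interim result, skipped
    fake_response("turn on the light", True),
    fake_response("ignored", True),       # never reached
]
print(first_final_transcript(stream))
```

Returning the text instead of writing to a global would also let the main loop keep the result in a local variable.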

<3. GPIO errors>

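- With GPIO, the order of the calls matters.

- Passing variables around with global caused errors.

- It works best to bundle each feature into one function and call it whenever it is needed.

- When registering a button for event detection, declare the event right below the variables, the same way a variable is declared.

- Button pins worked well when set up with the pull-up option:

GPIO.setup(button1_pin, GPIO.IN, pull_up_down=GPIO.PUD_UP)

- In the event-detect call, the handler had to be passed as callback=function_name to avoid an error:

GPIO.add_event_detect(button2_pin, GPIO.RISING, callback=button2Pressed)

- I also learned about wait_for_edge: it blocks until the button is pressed and moves on once the edge arrives.

while True:
    GPIO.wait_for_edge(button1_pin, GPIO.FALLING)
    print("Button pressed! save", _data)

- If the GPIO state is not cleaned up properly, related errors keep coming back.

GPIO.cleanup()  # always do this

GPIO.setwarnings(True)  # most people set this to False, I am not sure why

GPIO.remove_event_detect(button1_pin)  # not strictly required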
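One thing that helps when choosing between GPIO.RISING and GPIO.FALLING: with PUD_UP the pin idles high, so pressing the button produces a falling edge and releasing it produces a rising edge. A small sketch that labels the edges in a list of simulated readings (`classify_edges` is a hypothetical helper, not part of RPi.GPIO):

```python
def classify_edges(samples):
    """Label each transition in a sequence of pull-up pin readings."""
    events = []
    for previous, current in zip(samples, samples[1:]):
        if previous and not current:
            events.append("FALLING (press)")
        elif current and not previous:
            events.append("RISING (release)")
    return events


# Idle high, pressed for two samples, then released.
print(classify_edges([1, 1, 0, 0, 1]))
```

So in the script above, the RISING callback on button 2 fires when the button is released, while wait_for_edge with FALLING on button 1 returns as soon as it is pressed.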
